How Valyu DeepSearch sets a new standard for agentic retrieval performance.

As AI adoption accelerates, agents and AI applications are becoming the primary consumers of information and, increasingly, the primary executors of knowledge work. From investment research to medical diagnosis, AI agents are taking on complex cognitive tasks that once required teams of human experts.

Delivering relevant and contextual data to these agents, however, remains a persistent challenge. Unlike humans, AI systems don't consume information through "blue links" or other traditional search paradigms. Instead, agents generate queries and expect structured, context-aware results they can directly reason over. In knowledge work, where agents analyse earnings, investigate drug interactions, or synthesise economic trends, poor retrieval means flawed analyses and degraded decision-making at scale.

To address this, we developed Valyu DeepSearch, a Search API purpose-built for agents performing high-stakes knowledge work that demands high-quality, structured information. To ensure continuous improvement of this agentic search experience, we built a comprehensive benchmarking framework that measures retrieval quality across diverse domains and guides our ongoing optimisation efforts.

Why Benchmarking Matters

Benchmarking search APIs for AI agents is essential because retrieval quality directly determines reasoning quality. Poor retrieval leads to wasted tokens, higher latency, and context rot, a problem compounded in multi-step workflows.

By systematically benchmarking APIs, we can quantify accuracy, robustness, and domain performance across real-world tasks. This ensures developers and end-users can trust an agent’s outputs and rely on consistent, verifiable performance.

In this post, we share our benchmarking philosophy, methodology, and results, comparing Valyu DeepSearch’s state-of-the-art performance against other search APIs.

Evaluation Philosophy

Our philosophy begins with a simple principle: retrieval quality should be measured in the context of the tasks agents are built to perform. We designed our evaluation framework with an agent-first philosophy, focusing on the unique ways AI consumes and reasons over information.

Agent-Centric Benchmarking

Mainstream search engines were designed for human browsing patterns, not for AI agents. Where humans skim snippets and click links, agents require dense, structured, and context-rich passages that can be reasoned over directly. For agents, irrelevant retrieval carries compounding costs: wasted tokens, higher latency, and degraded reasoning quality due to context rot. Our benchmarks therefore focus on whether a search API actually provides the information agents need to complete their tasks reliably and efficiently.

Domain-Specific Evaluation

One of our core principles is that not all search is created equal. General-purpose search may be sufficient for open-domain question answering, but specialised domains like finance and medicine demand domain-specific retrieval. For example:

- Finance: Benchmarks test industry-standard search tasks over SEC filings, earnings reports, and macroeconomic indicators.

- Medicine: Benchmarks evaluate complex medical questions that reflect real-world decision-making challenges.

- General Search: Measures freshness, breadth of coverage, and handling of ambiguous or long-tail queries.

Benchmark Methodology

All evaluations were conducted using a lightweight benchmarking harness built with the AI SDK, allowing us to test both one-shot and multi-turn search performance.

Each benchmark uses simple, generic prompts designed to reflect real-world agentic search behaviour. Tool definitions for each API were taken directly from their official AI SDK integration documentation, ensuring fairness and realistic usage.
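To make the distinction between one-shot and multi-turn evaluation concrete, here is a minimal Python sketch of how such a harness could be structured. It is an illustration only, not the harness used for these results: the names `Question`, `run_one_shot`, `run_multi_turn`, and the `search`, `answer`, and `refine` callables are all hypothetical stand-ins for a search tool and a model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Question:
    prompt: str      # the question posed to the agent
    reference: str   # the reference answer used later for grading


# Hypothetical interfaces (assumptions, not a real API):
SearchTool = Callable[[str], List[str]]                 # query -> retrieved passages
Answerer = Callable[[str, List[str]], str]              # (prompt, passages) -> answer
Refiner = Callable[[str, List[str]], Optional[str]]     # -> next query, or None to stop


def run_one_shot(q: Question, search: SearchTool, answer: Answerer) -> str:
    """One search call with the raw prompt, then answer from what came back."""
    passages = search(q.prompt)
    return answer(q.prompt, passages)


def run_multi_turn(
    q: Question,
    search: SearchTool,
    answer: Answerer,
    refine: Refiner,
    max_turns: int = 3,
) -> str:
    """Search repeatedly, letting the agent issue follow-up queries.

    Passages accumulate across turns; the loop stops when `refine`
    returns None or when `max_turns` searches have been made.
    """
    passages: List[str] = []
    query = q.prompt
    for _ in range(max_turns):
        passages.extend(search(query))
        next_query = refine(q.prompt, passages)
        if next_query is None:
            break
        query = next_query
    return answer(q.prompt, passages)
```

Keeping the search tool behind a single callable means each API can be swapped in without changing the prompts or the loop, which is the property the fairness argument above relies on.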

Responses were automatically scored by Gemini 2.5 Pro, which evaluated each output as correct, partially correct, or incorrect.
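How the three judge labels roll up into a single accuracy figure depends on how partially correct answers are counted, which is not specified here. The sketch below shows one plausible aggregation in Python; the `accuracy` function and its `partial_credit` parameter are hypothetical, and the default of zero credit is an assumption rather than the rule used for the reported numbers.

```python
from collections import Counter
from typing import Iterable

# The three labels the judge model can assign, as described above.
VALID_LABELS = ("correct", "partially correct", "incorrect")


def accuracy(labels: Iterable[str], partial_credit: float = 0.0) -> float:
    """Aggregate per-question judge labels into one accuracy score.

    `partial_credit` is the weight given to "partially correct" answers:
    0.0 counts them as wrong (strict), 1.0 counts them as right (lenient).
    This weighting is an assumption made for illustration.
    """
    counts = Counter(labels)
    unknown = set(counts) - set(VALID_LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labels to score")
    credited = counts["correct"] + partial_credit * counts["partially correct"]
    return credited / total
```

Reporting both the strict and the lenient value for the same run is a cheap way to show how sensitive a ranking is to the treatment of partial answers.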

Together, these design choices are intended to keep our evaluations fair, reproducible, and representative of real integration scenarios.

Benchmark Details

Below is an overview of each benchmark used in our evaluation suite.

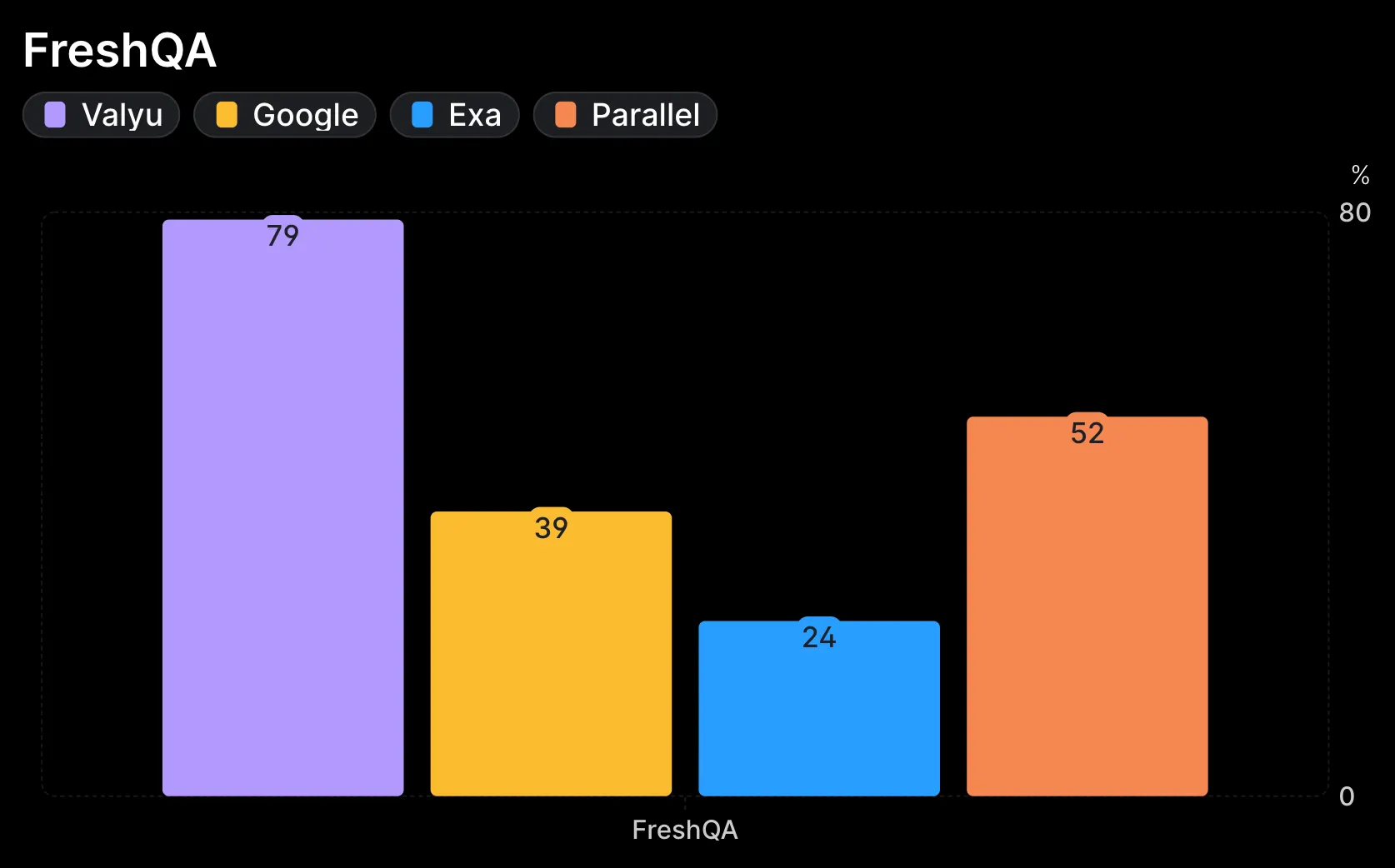

FreshQA Benchmark

The FreshQA Benchmark contains 600 time-sensitive questions updated weekly.

It assesses how effectively APIs handle recent and evolving information, a critical capability for use cases such as news summarisation, event tracking, and trend monitoring.

Our evaluation used the September 30th test set, the most recent release available at the time of testing.

FreshQA evaluation: Valyu achieved 79% accuracy, Parallel 52%, Google 39%, and Exa 24% on queries requiring up-to-date information retrieval.

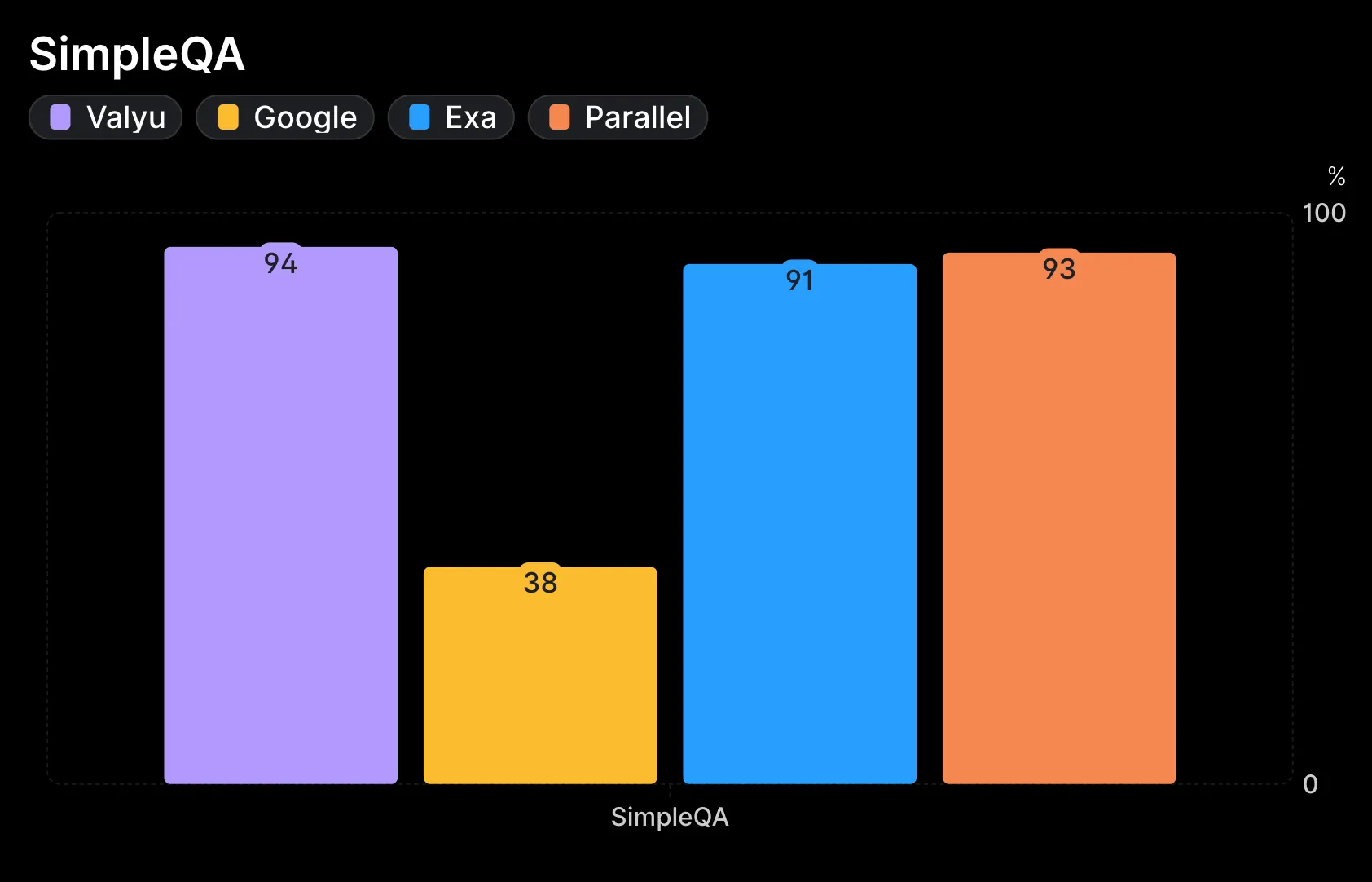

SimpleQA Benchmark

The SimpleQA Benchmark includes 4,326 factual questions designed to measure retrieval precision on straightforward, unambiguous queries.

It serves as the go-to baseline for evaluating general-purpose search quality and factual accuracy.

SimpleQA evaluation: Valyu achieved 94% accuracy, Parallel 93%, Exa 91%, and Google 38% on straightforward factual questions.

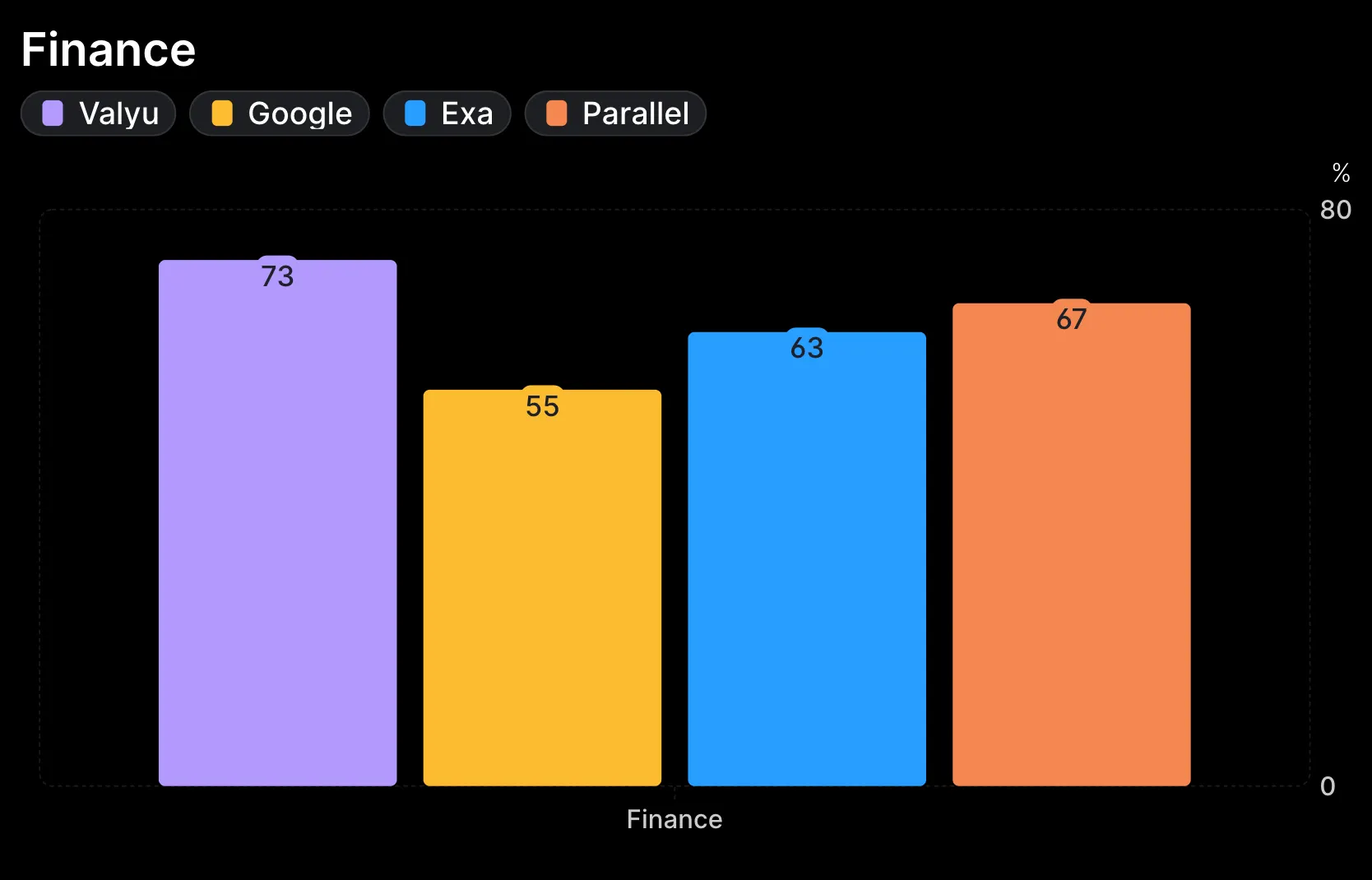

Finance Benchmark

The Finance Benchmark evaluates search performance in financial data retrieval and reasoning across 120 queries covering SEC filings, market data, and macroeconomic indicators.

Questions simulate realistic research workflows, covering topics like stock performance, regulatory disclosures, and insider transactions, and are designed to test retrieval precision in finance-specific contexts.

Finance evaluation: Valyu reached 73% accuracy, Parallel 67%, Exa 63%, and Google 55% on financial questions.

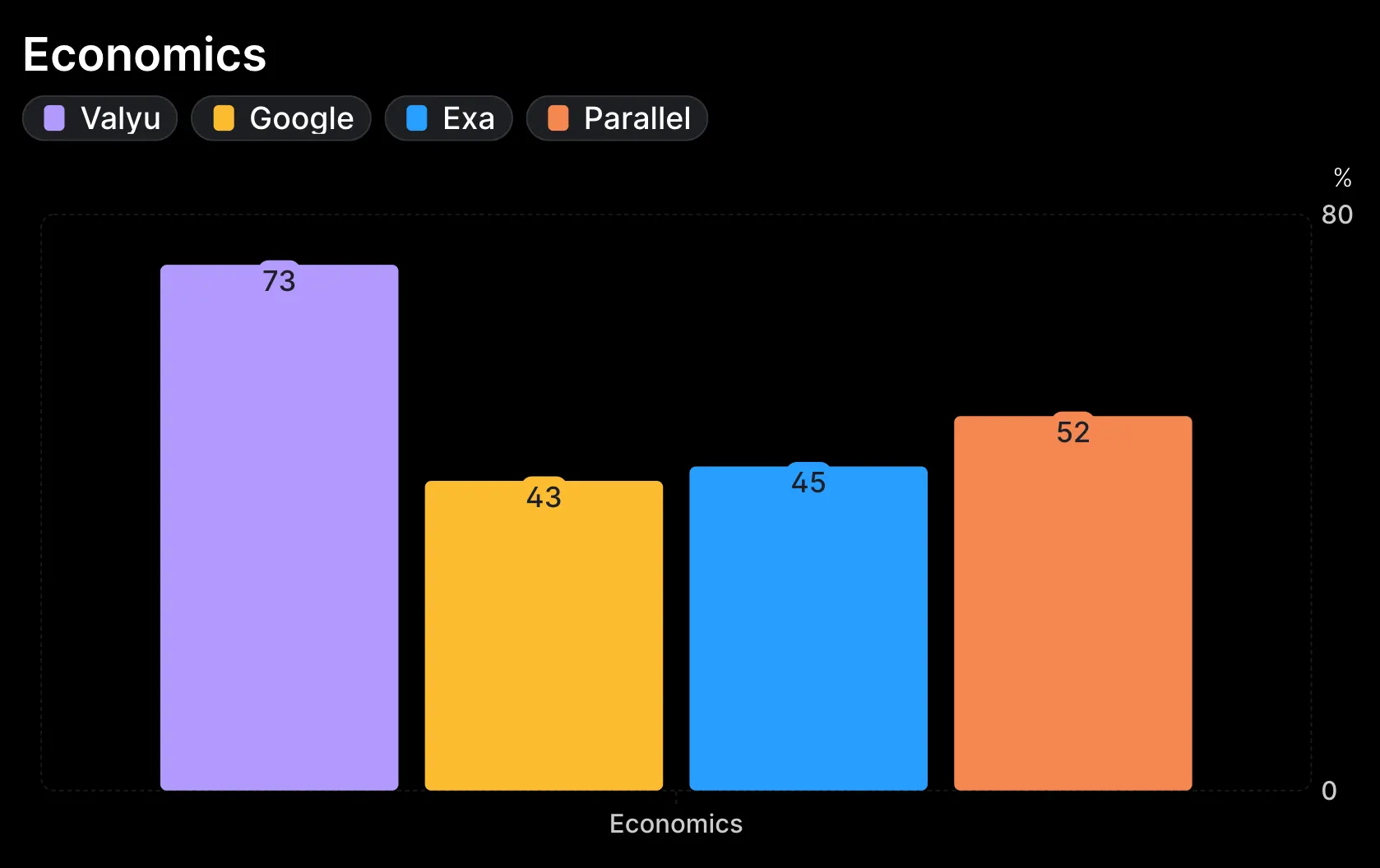

Economics Benchmark

The Economic Data Benchmark evaluates search performance in retrieving and reasoning through 100 analyst-style questions. Queries are drawn from real-world data sources, including World Bank Indicators, Bureau of Labor Statistics, and FRED.

Questions cover topics such as GDP, inflation, employment, productivity, and monetary policy, designed to simulate authentic economic research and analysis workflows used in policy, finance, and macroeconomic forecasting.

Economics evaluation: Valyu scored 73%, Parallel 52%, Exa 45%, and Google 43% on queries spanning GDP, inflation, and employment data.

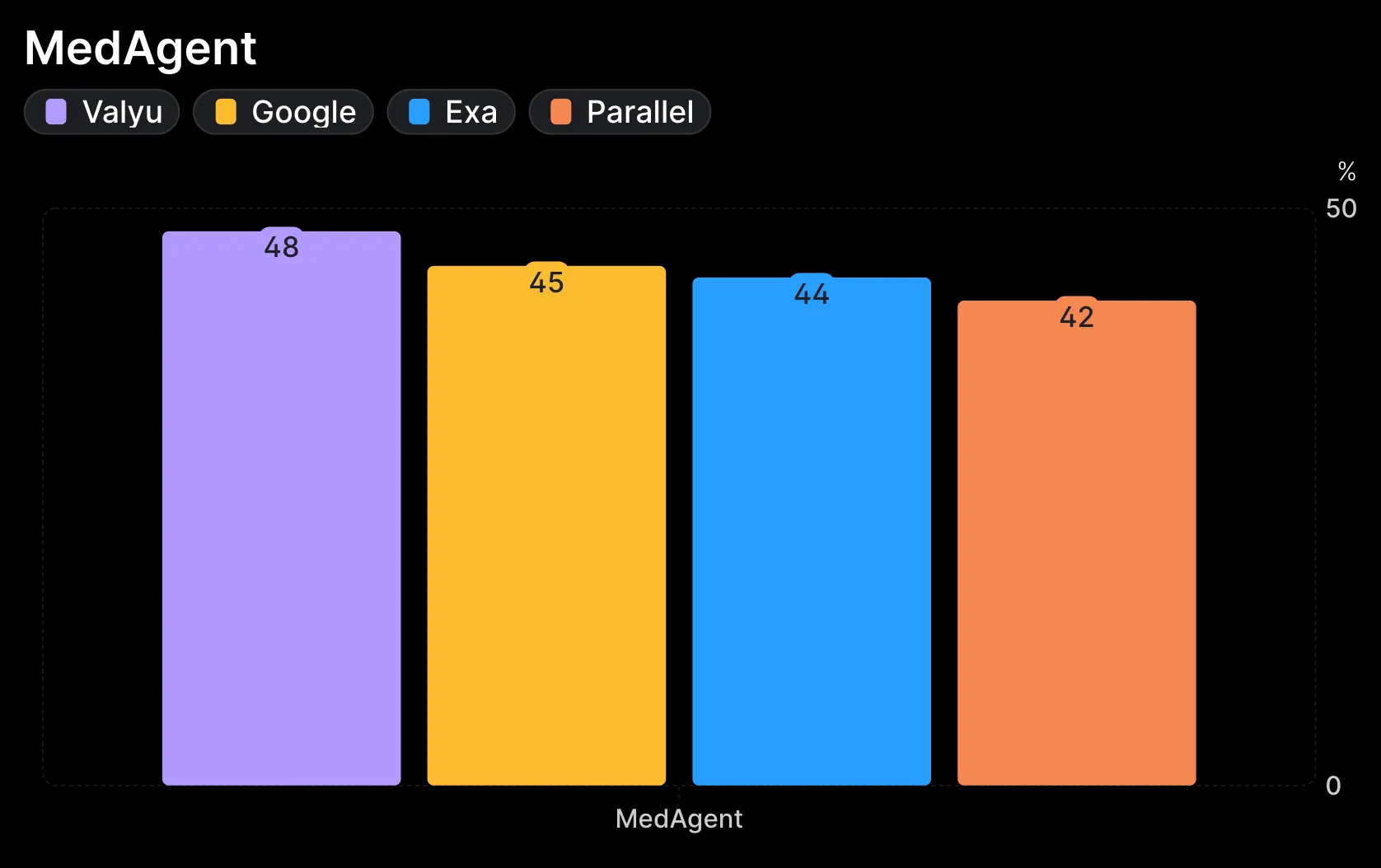

Medical Benchmark (MedAgent)

The MedAgent Benchmark evaluates performance on high-difficulty medical questions, combining 562 complex queries from clinical trials, drug labels, and medical QA benchmarks.

This benchmark tests an API’s ability to retrieve precise, evidence-grounded answers in high-stakes scenarios where factual accuracy and interpretability are critical.

MedAgent evaluation: Valyu achieved 48% accuracy, Google 45%, Exa 44%, and Parallel 42% on complex medical queries.

Testing dates

All benchmark evaluations were conducted on October 3rd, 2025.

Results Summary

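Across all five benchmarks, Valyu DeepSearch API achieved the highest accuracy of the APIs tested, outperforming other search APIs in factual correctness, freshness, and domain relevance. Its lead over the runner-up ranged from 1 percentage point on SimpleQA (94% vs 93%) to 27 points on FreshQA (79% vs 52%).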

These results reaffirm Valyu’s position as the leading AI-native search API, optimised for agentic reasoning and high-context retrieval.
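For readers who want to compare the results side by side, the short Python sketch below collects the accuracy figures reported in the sections above and computes the gap between the top-scoring API and the runner-up on each benchmark. The `SCORES` table and the `lead_over_runner_up` helper are illustrative names; the numbers are the percentages quoted in this post.

```python
from typing import Dict, Tuple

# Accuracy (%) per benchmark, as reported in the sections above.
SCORES: Dict[str, Dict[str, int]] = {
    "FreshQA":   {"Valyu": 79, "Parallel": 52, "Google": 39, "Exa": 24},
    "SimpleQA":  {"Valyu": 94, "Parallel": 93, "Exa": 91, "Google": 38},
    "Finance":   {"Valyu": 73, "Parallel": 67, "Exa": 63, "Google": 55},
    "Economics": {"Valyu": 73, "Parallel": 52, "Exa": 45, "Google": 43},
    "MedAgent":  {"Valyu": 48, "Google": 45, "Exa": 44, "Parallel": 42},
}


def lead_over_runner_up(scores: Dict[str, int]) -> Tuple[str, str, int]:
    """Return (leader, runner_up, gap in percentage points) for one benchmark."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (leader, top), (runner_up, second) = ranked[0], ranked[1]
    return leader, runner_up, top - second


if __name__ == "__main__":
    for benchmark, scores in SCORES.items():
        leader, runner_up, gap = lead_over_runner_up(scores)
        print(f"{benchmark}: {leader} leads {runner_up} by {gap} points")
```

The gap is widest on the freshness and economics benchmarks and narrowest on SimpleQA and MedAgent, where the APIs are separated by only a few points.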


Future Work

Our ongoing focus is to expand and deepen this benchmarking framework, continuously stress-testing Valyu DeepSearch under more diverse and demanding conditions.

Future work includes:

- Increasing the breadth and difficulty of existing benchmarks

- Introducing domain-specific evaluations in science, law, and regulatory domains

- Measuring content extraction fidelity and token-to-information efficiency

- Designing long-horizon “DeepResearch” tasks for multi-step retrieval and reasoning

These next iterations will raise the bar for agentic search evaluation, ensuring Valyu DeepSearch continues to define the standard for AI-native retrieval performance.